This story is from Texas Monthly’s archives. We have left the text as it was originally published to maintain a clear historical record.

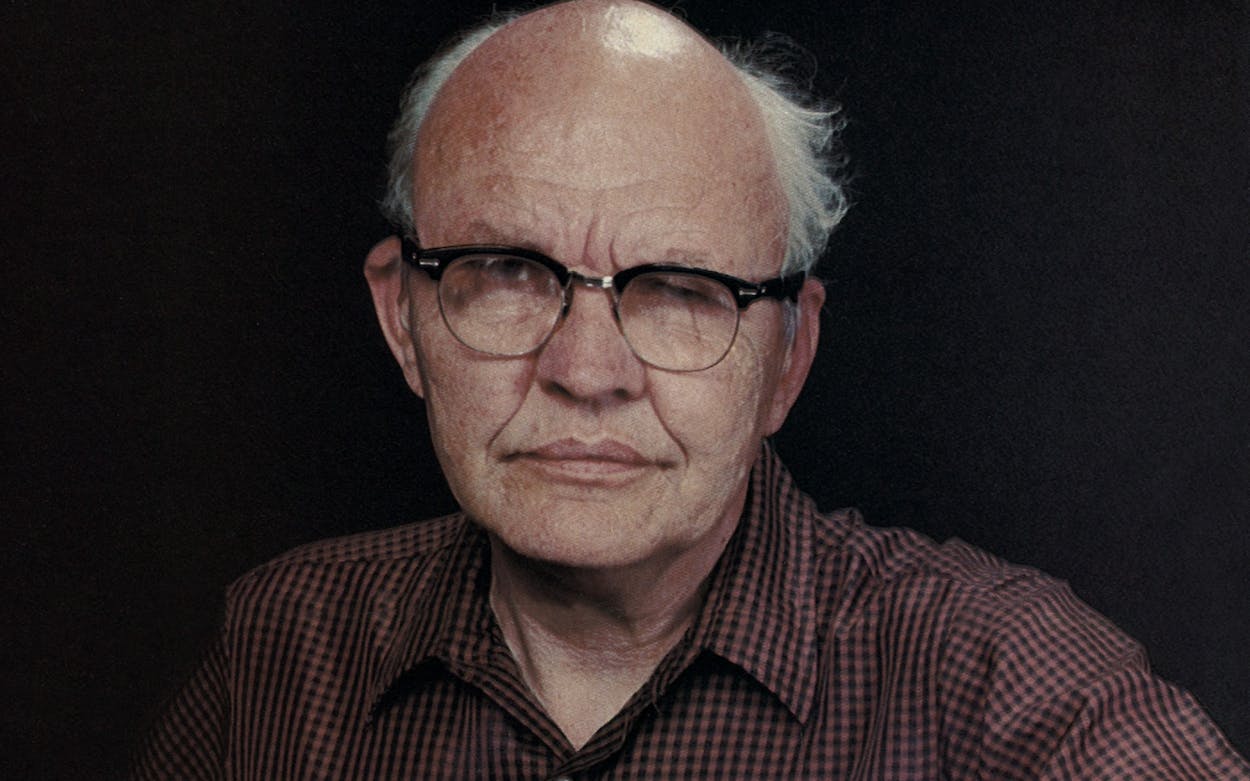

Except for one little hitch, this scene would work nicely in one of those American Express card commercials. Here’s the internationally acclaimed inventor—a man whose idea changed the daily life of the world—walking through the Dallas–Fort Worth Airport, and nobody knows who he is. No eyes light up with the spark of recognition. No kids come up to ask for autographs. Nobody pays any attention at all. Eventually, the tall, balding Texan pulls out his wallet to pay for something, and now at last we find out who he is: Jack St. Clair Kilby.

Okay, there’s the hitch. Even after learning his name, most people have no idea who Jack Kilby is. A century ago, men of his ilk—men like Edison and Bell, whose inventions entered every home and spawned giant industries—were accorded enormous prominence almost overnight. Not so today. It has been not quite a quarter century since Jack Kilby, experimenting on his own in his lab at Texas Instruments, worked out the idea for the monolithic integrated circuit, the semiconductor chip in which the components of an electric circuit (originally a half dozen; today, a quarter million or more) can be put together on a sliver of silicon no bigger than an infant’s thumbnail. It has been a decade since he filed his patent application for the chip’s most famous offspring, the pocket calculator. He has won countless awards. His picture hangs in the National Inventors’ Hall of Fame in Washington between those of Henry Ford and Ernest O. Lawrence, inventor of the atom smasher. Companies around the world—particularly in Japan—have invested billions in his idea. British magazines run full-length profiles of him. Throughout the international electronics community, he is recognized as a founding father of the microelectronics revolution. But outside that technical world—even in Dallas, where he has lived and worked for 24 years—hardly anybody knows who Jack Kilby is.

Just about everybody, though, knows some of the remarkable devices that Jack Kilby’s minute circuits have made possible. The semiconductor chip is the heart and brains of microcomputers and moon rockets, pacemakers and Pac-Man, digital stereo and data transmission networks, industrial robots and independently targeted reentry vehicles. About ten years ago engineers found a way to put an entire computer on a single chip, and that development has led to so many inventions that the National Academy of Sciences has called microelectronics the second industrial revolution. The hyperbole factories at Time magazine and other such journals regularly refer to the integrated circuit as the miracle chip. But this is a man-made miracle, created on a hot summer day in Dallas by an unknown inventor, Jack Kilby.

At first meeting, Kilby seems an unlikely miracle worker. A lanky 59-year-old with a big, leathery face, black horn-rimmed glasses, and a few wayward tufts of gray hair poking up from his temples, he looks precisely the opposite of high-tech. Plainspoken and plainly dressed, he is a quiet, introverted, grandfatherly type; he talks slowly in a deep, rumbling voice that still has some of the country twang of Great Bend, Kansas, where he grew up. For all his pioneering work in the most modern of technologies, Kilby has an intriguing old-fashioned streak. He won’t wear a digital watch. A computer would be useful in his work, but he doesn’t use one because “I don’t really know how.” Although he is probably the single person most responsible for the demise of the slide rule, he still keeps his favorite Keuffel & Esser Log-Log Decitrig handy in the center drawer of his desk, and in some ways he prefers it to the calculators that rendered it obsolete. “It’s an elegant tool,” he says affectionately. “With the slide rule, there’re no hidden parts. There’s nothing going on that isn’t right there on the table. It has sort of an honesty about it.”

In an industry and a company (Texas Instruments, where he has worked off and on since 1958) where “tough” and “aggressive” are words of high praise, Kilby is a famously nice guy. As he treks through the meandering passageways of TI’s Dallas headquarters, walking with the stooped gait of a man who has bumped his head too often on low ceilings, he greets everybody by name, from vice presidents to stenographers. In discussing his achievements he betrays no hint of self-regard or of hostility toward his competitors. Characteristically, he declines to say a word about the languishing and occasionally bitter corporate controversy that wound through ten years of litigation under the rubric Kilby v. Noyce.

Noyce is Robert N. Noyce, vice chairman of Intel Corporation, one of Texas Instruments’ archrivals. Six months after Kilby first conceived the integrated circuit, Noyce, then at Fairchild Semiconductor, developed a process for integrating circuits on chips that was better in some respects than Kilby’s initial method. Both men were awarded patents (which their companies have freely licensed to others who wanted to make chips) and their firms battled over the rights, more or less inconclusively, all the way to the Supreme Court. Noyce prevailed on most points, but the decision was so narrow it had no commercial impact, and Kilby’s inventorship was never legally questioned. Most textbooks today treat the two men as co-inventors, with Kilby getting credit for the idea and Noyce for the process. But the executives of TI, Intel, and Fairchild have not given up the fight, and they still exchange nasty gibes about the chip’s paternity. Kilby himself, in contrast, speaks highly of Noyce’s work and is generous in describing his contributions to integrated circuit technology. Noyce, for his part, is equally generous, readily agreeing that Kilby developed the first working chip.

The ongoing microelectronics revolution can fairly be considered the supreme practical application of the “new physics” of the twentieth century; until people understood the complexities of quantum mechanics, it was impossible to understand the movement of electric charges through semiconductors, which are elements that don’t normally conduct electricity but can be induced to under special conditions. Thus Kilby has been working at or near the front lines of physics most of his professional life. But he is not a scientist. He is quite firm on the point, preferring instead the more workaday term “engineer.” “There’s a pretty key difference,” Kilby says. “A scientist is motivated by knowledge; he basically wants to explain something. An engineer’s drive is to solve problems, to make something work. Engineering, or at least good engineering, is a creative process.”

Kilby has done a good deal of thinking about that process, and true to form, he has worked out a careful theory of the art of solving a problem, technical or otherwise. To simplify it considerably, the method involves two stages of concentrated thought. In the first, the inventor has to look things over with a wide-angle lens, hunting down every fact that might conceivably be related to the problem. “You accumulate all this trivia,” Kilby says, “and you hope that sometime maybe a millionth of it will be useful.” Then the Kilby system requires switching to an extremely narrow focus, thinking strictly about the problem and tuning out the rest of the world. The inventor also has to tune out the obvious solutions, because if the problem is of any importance, all the obvious answers have probably been tried already. Then, if his preparation has been broad enough, and he has defined the problem correctly, and he focuses on the right questions, and he’s creative, and he’s lucky, he might hit upon a nonobvious solution that works. But that’s not enough, at least not for an engineering problem. The engineer’s solution has to be cost-effective as well. “You could design a nuclear-powered baby bottle warmer, and it might work, but that’s not an engineering solution,” Kilby says. “It won’t make sense in terms of cost. The way my dad always liked to put it was that an engineer could find a way to do for one dollar what everyone else could do for two.”

Kilby’s dad was a working engineer who rose to the presidency of the Kansas Power Company, a Great Bend–based electric utility that had small power plants scattered around the western part of the state. In the summers, father and son used to traverse the plains in a big 1935 Buick, stopping to inspect a faulty transformer or to crawl through the works of a new generating station. When the great ice storm of ’37 swept through Kansas, felling telephone lines and blocking roads, the senior Kilby used a neighbor’s ham radio to keep track of his far-flung operations. Jack was quickly hooked. By his junior year at Great Bend High he had built a transmitter, had passed the test for his operator’s license, and was conversing with other radio buffs all over the Midwest. It was clear by then that Jack would make a career in electrical engineering, and he set his sights on the engineer’s mecca, the Massachusetts Institute of Technology.

At this point our inventor’s story takes a downward turn. Because his high school did not offer all the math that MIT required, Kilby had to travel to Cambridge to take a special entrance exam. He flunked it. Looking around for a college that would take him at the last minute, he ended up at the University of Illinois. Four months into his freshman year, the Japanese attacked Pearl Harbor; student Kilby became Private Kilby, assigned to a dreary job repairing radio transmitters at a remote Army outpost in northeastern India. After the war, Kilby returned to Illinois, eager to learn about wartime advances in electronics, particularly radar, one of the first electronic devices to use semiconductors. There were people at Illinois teaching semiconductor physics as an application of quantum theory, but such sophisticated scientific fare was off limits to an electrical engineering major. Kilby graduated in 1947 with a traditional engineering education and decent but not outstanding grades. He went to work for Centralab in Milwaukee, for the excellent reason that it was the only firm that offered him a job.

As such things often do, the job turned out to be a perfect spot for an engineer who would (though he didn’t know it at the time) one day tackle the concept of integrated circuitry. Centralab then was producing electric parts to be wired into hearing aid, radio, and television circuits. It was an intensely competitive business, and Centralab was working on the notion that it could save money if all the parts of a circuit—carbon resistors, glass vacuum tubes, silver wires—were produced on a single ceramic base in one manufacturing operation. The firm had only mixed success, but the conceptual seed—that the components of a circuit need not be manufactured separately—was to stay with Kilby and bear important fruit.

At Centralab, too, Kilby learned the invaluable, confidence-building lesson that he had the creative talents a good engineer needs. Centralab’s process for making resistors involved printing small patches of carbon on a ceramic base; the machinery was not very exact, though, so no two carbon patches were the same size. That made resistor performance unpredictable. Kilby was assigned to find a simple, cheap, precise way to make all the resistors the same size. Starting off with his wide-angle review of the problem, the young engineer read everything he could find that might possibly be applicable. Somehow he came upon an article about a new dental technique that used tiny, precisely gauged sandblasters to scour away the decayed part of a tooth. The idea never caught on with dentists, whose patients found it repulsive. But Kilby managed to track down some of those precision sandblasters. That nonobvious solution turned out to be the perfect answer for making all the carbon resistors exactly the same size.

The Death of the Tube

Kilby had been on the job for less than a year when the world of electronics was struck by a thunderbolt, one of those rare developments that change everything. It was, indeed, a seminal event of postwar science: the invention of the transistor.

Until the transistor came along, electronic devices, from the simplest AM radio to the most complex computer, were all based on vacuum tubes. You may remember them if you examined a television or a radio sometime before the seventies; when you turned on the switch, you could look through the holes in the back of the set and see a bunch of little orange lights start to glow—the filaments inside the vacuum tubes.

The tube was essentially the same thing as a light bulb: inside a vacuum sealed by a glass bulb, current flowed through a wire filament, heating the filament and giving off incandescent light. Very early in the life of the light bulb, Edison noticed that the filament was also giving off a steady electric charge. Edison didn’t understand what was happening (physicists now say the charge is a flow of electrons “boiling” off the filament). But he guessed, correctly, that the phenomenon could be useful and patented it in 1883.

The radio pioneers of the early twentieth century found that if you ran some extra wires into the bulb to take advantage of this “Edison effect” current, the vacuum tube could perform two electronic functions. First, it could pull a weak radio signal from an antenna and strengthen, or amplify, it enough to drive a loudspeaker, converting an electronic signal into sound loud enough to hear. This made radio, and later television, workable. Second, a properly wired tube could switch rapidly from on to off (because of its ability to turn a current on or off, the vacuum tube was known in England as a valve). This capability was essential for digital computers, which make logic decisions and carry out mathematical computations by various combinations of on and off signals. It is a tedious process, and to be useful, a computer needs to switch signals at tremendous speeds. The vacuum tube filled the bill.
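To make the idea of computing with "on and off signals" concrete, here is a minimal sketch in Python. It is an illustration only, not a description of how any particular tube computer was wired: each switch is modeled as a Python boolean (True for on, False for off), and the function names `half_adder`, `full_adder`, `add_bits`, `to_bits`, and `from_bits` are invented for this example. It shows how nothing more than combinations of on and off states can add two numbers.

```python
# Illustrative sketch: every "switch" is a bool (True = on, False = off).
# All function names here are hypothetical, chosen only for this example.

def half_adder(a, b):
    """Add two one-bit signals; return (sum_bit, carry_bit)."""
    return (a != b, a and b)


def full_adder(a, b, carry_in):
    """Add two one-bit signals plus an incoming carry."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return (s2, c1 or c2)


def add_bits(x, y):
    """Add two equal-length lists of switches, least significant bit first."""
    carry = False
    out = []
    for a, b in zip(x, y):
        s, carry = full_adder(a, b, carry)
        out.append(s)
    out.append(carry)  # the final carry becomes the top bit
    return out


def to_bits(n, width):
    """Turn a non-negative integer into `width` on/off switches."""
    return [bool((n >> i) & 1) for i in range(width)]


def from_bits(bits):
    """Read a list of on/off switches back as an integer."""
    return sum(1 << i for i, b in enumerate(bits) if b)


if __name__ == "__main__":
    print(from_bits(add_bits(to_bits(5, 4), to_bits(6, 4))))  # prints 11
```

Even this four-bit sum takes dozens of individual on-off decisions, which is why the speed of the switch mattered so much.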

Efficient though they were for lighting rooms, vacuum tubes had serious drawbacks in other applications. They were big, expensive, fragile, and power hungry. If a lot of tubes were grouped together, as in a computer or a telephone switching center, all those glowing filaments gave off intolerable heat. As we all know from the light bulb, tubes have an exasperating tendency to break or burn out. The University of Pennsylvania’s ENIAC, the first important digital computer, which used 18,000 tubes, never lived up to its potential, because tubes kept burning out in the middle of its computations.

The transistor, invented at Bell Telephone Laboratories in 1947 by a team headed by William Shockley, changed all that. The transistor was based on semiconductor physics. It achieved amplification and rapid on-off switching by moving electric charges along controlled paths in a solid block (hence the term “solid state”) of semiconductor material—usually germanium or silicon.

With no glass bulb, no vacuum, no hot filament, no warm-up time, and a weight and size that were a fraction of the tube’s, the transistor was a godsend to the electronics industry. By the late fifties, solid state was quickly becoming the standard for radios, hearing aids, and other small devices. The computer industry happily embraced the transistor. The military, which needed small, low-power, long-lasting parts for ballistic missiles and the nascent space program, provided a major market as well.

But the transistor had a drawback of its own, which becomes clear if you picture the making of an electric circuit. Building a circuit is like building a sentence. There are certain standard components—nouns, verbs, and adjectives in a sentence; resistors, capacitors, diodes, and transistors in a circuit—each with its own function. In a circuit, a resistor restricts the flow of electricity to a desired amount, giving precise control over the current at any point. A capacitor stores electrical energy and releases it when it’s needed. A diode is a filter that blocks one type of current but permits another type to pass. A transistor can be an amplifier or a switch. Each of them traditionally was manufactured separately from different materials. By connecting the components in different ways, you can get sentences, or circuits, that perform different functions.

Writers of sentences are taught to keep their designs short and simple. This rule does not apply in electronics. Some of the most useful circuits are big and complicated, with hundreds or thousands of components wired together. That was the problem with the transistor. It opened vast possibilities for new and improved electronic applications, but many of those applications required large numbers of transistors wired into circuits with equally large numbers of resistors, capacitors, and diodes. The components could be mass-produced, but the wiring was mainly handwork, with resultant problems of time, cost, and reliability.

And so the electronics industry faced a dilemma. Designers could sit down at the drafting board and draw up exotic transistorized circuits that represented great advances over anything that had been designed before, but their circuits would be too costly and too difficult to produce. In the mid-fifties, people were already planning the computers that would guide a rocket to the moon. But those plans called for circuits with 10 million components. Who could make a circuit like that? How could it fit into a rocket? By the late fifties, this gap between what could be designed and what could practically be produced had put a near-total block in the path of progress in electronics. The engineers called the problem the tyranny of numbers.

The search for a solution became a high-priority item throughout the industrialized world. The U.S. Air Force placed a multimillion-dollar bet on a concept called molecular electronics; despite some breathless press releases promising that a breakthrough was right around the corner, nothing useful ever came of the idea. The Army, in classic fashion, refused to have anything to do with the Air Force project and pursued two quite different ideas of its own. The Navy went off in another direction.

Throughout the fifties, scientists and engineers were thinking about some kind of integrated electronics package in which the components and their connecting wires could all be produced at once. G. W. A. Dummer, a physicist at Britain’s Royal Radar Establishment, suggested in 1952 that “it seems now possible to envisage electronics equipment in a solid block with no connecting wires.” Around the same time, when engineers at Bell Labs developed a precise way to diffuse desired impurities into a block of semiconductor material, it became possible to build a silicon block with neatly segregated regions of positively charged (P-type) and negatively charged (N-type) silicon. That got engineers thinking about the electronic functions that might be performed by various arrangements of P-type and N-type regions. There was, in short, considerable intellectual ferment, and there were all sorts of proposals for circumventing the tyranny of numbers.

But all those ideas were pie in the sky. There were circuits by the dozens on paper, but there was not a single engineering solution, because nobody knew how to make any of those paper circuits come to life. The Royal Radar Establishment awarded a contract for the development of Dummer’s solid block circuit: the only result was a model that was never successfully built. The clear need was to design and produce something that worked. To do that, several electronics firms, including Texas Instruments, launched their own research efforts. At TI the project was placed under the direction of Willis Adcock, a zesty, fast-talking physical chemist. He immediately set out to recruit a task force. One of the men Adcock hired was a lanky, quiet 34-year-old engineer from Milwaukee named Jack Kilby.

Alone in the Lab

Kilby came to Dallas in May 1958 with a decidedly vague mission. Adcock’s group was working, under an Army contract, on an idea called the micromodule. The theory behind it was that all the components of a circuit could be manufactured in the same shape and size, with wiring built into each component. The parts could then be snapped on top of one another like a kid’s Lego blocks, with no need for individual wiring connections. A lot of people were excited about the micromodule, but Jack Kilby wasn’t one of them. For one thing, it didn’t deal with the main problem: huge numbers of individual components. Further, it bore some resemblance to an idea that had failed at Centralab. “In retrospect,” Kilby says, “it’s hard for me to see why I wanted to spend any time on ideas of that type at all. But I made that choice.” The lure of a prestigious firm like Texas Instruments and the opportunity to work on the most important problem in electronics overcame his distaste for the micromodule.

Texas Instruments then had a mass-vacation policy; everybody took off the same few weeks in July. Kilby, who hadn’t been around long enough to earn vacation time, was left alone in the semiconductor lab. If he was ever going to find a serious alternative to the micromodule, here was the chance.

He plunged in with his wide-angle approach, soaking up everything he could about the problem at hand and the ways Texas Instruments might solve it. One of the things he did was to think through his new firm’s manufacturing profile. The obvious fact that emerged was TI’s heavy commitment to silicon. The company had invested millions of dollars in processes and machinery for producing high-purity silicon, and it was also the world’s leading manufacturer of silicon transistors. “If Texas Instruments was going to do something,” Kilby says now, “it probably had to involve silicon.”

Fair enough. What could you do with silicon? Obviously transistors could be made of it. Silicon diodes were also fairly common. Kilby had designed some unconventional capacitors when he was at Centralab, and thinking back on that work he hit on the idea that a capacitor could be made of silicon, too. Its performance wouldn’t approach that of the standard metal-and-ceramic capacitor, but it would do the job. For that matter, you could also make a silicon resistor; not as good as the carbon or nichrome resistors, but it would work. And come to think of it—here was the idea that was to revolutionize electronics—if you could make all the essential parts of a circuit out of one material, you could probably manufacture all of them, all at once, in a single block of that material. The more Kilby thought about it, the more appealing this notion became. If all the parts were made in a single integrated chip of semiconductor material, you wouldn’t have to wire anything together; connections could be laid down internally within the semiconductor chip. And without wires or wiring connections, you could get many parts into a small piece of silicon. On July 24, 1958, Kilby scrawled in his notebook the central idea: four kinds of “circuit elements could be made on a single slice”; he made rough sketches of how each of the components could be implanted in the silicon chip.

It was an elegantly simple idea, and like all great inventions, it broke the scientific rules of its day. “Nobody would have made these components out of semiconductor material then,” Kilby explains. “It didn’t make very good resistors or capacitors, and semiconductor materials were considered incredibly expensive. To make a one-cent carbon resistor from a good-quality semiconductor seemed foolish.” Kilby wasn’t even fully convinced it would work: “It was early enough that you couldn’t be sure that there weren’t some real flaws in the scheme somewhere.” The only way to find out was to have a model built; Kilby would need his boss’s okay to do that.

When everyone returned from vacation, Kilby showed his notebook sketches to Willis Adcock. “My attitude was, you know, it’s something,” Adcock recalls. “It was pretty damn cumbersome, you would have had a terrible time trying to produce it, but . . . let’s try it and see if it works better than anything else.”

Ironically, Texas Instruments, the great silicon house, did not have a suitable bar of silicon around for experimentation that summer, so the first model of Kilby’s circuit was built on a small piece of germanium, a semiconductor with qualities close to those of silicon. The design that Adcock and Kilby chose for the first test was a “phase-shift oscillator” circuit, a classic unit for testing purposes because it involves all the basic components of a circuit. If it works, the oscillator will transform a steady, direct electric current—the kind of constant current that comes from a battery—into fluctuating, or oscillating, waves of power. The transformation shows up on an oscilloscope, a piece of test equipment that displays electric currents graphically on a screen. If you hook a battery directly to the oscilloscope, the steady current will show up as a straight horizontal line on the screen. But if you put an oscillator between the battery and the oscilloscope, the oscillating current will show up as a gracefully undulating line—engineers call it a sine curve—waving across the screen.

On September 12, 1958, a group of Texas Instruments executives gathered in Kilby’s area at the lab to see if the new species of circuit would really work. Conceptually, of course, Kilby knew it would, but he was still nervous as he hooked up connections from a battery to the input terminals of his oscillator-on-a-chip, which was less than half the size of a paper clip. That done, he ran wires from the output side of his chip to the oscilloscope. Immediately a bright green snake of light started undulating across the screen in a perfect, unending sine curve. The integrated circuit worked. The men in the room looked at the oscilloscope, at the chip, at the oscilloscope again; then everybody broke into broad smiles. A new era in electronics had begun.

The Debut

Kilby had gone the next step beyond all the other scientists and engineers who had been talking and writing about integrated semiconductor circuits. He had invented the engineering solution. On paper, at least, Kilby’s first circuit was not nearly so neat or easy to manufacture as the idealized solid-state circuit that Dummer and other physicists had been writing learned papers about. Kilby’s circuit had only one advantage, but that was the crucial one: it worked.

Today the integrated circuit is the stuff of virtually all electronic gear. For an engineer to design a circuit for a robot or a rocket guidance system using individual components would be like hitching up a horse and buggy for a drive on the interstate. Back when the newborn device made its debut before electrical society, the reception was frosty. The coming-out party took place at the Texas Instruments booth of the Institute of Radio Engineers convention in the spring of 1959. “It wasn’t a sensation,” Kilby recalls dryly. “There was a lot of flak at first.” Critics said the new circuits would be difficult to produce. Besides, they could not perform as well as conventional circuits, in which each part was made of the optimum material for its particular function. To the electrical engineering community, the whole concept was threatening: if component manufacturers like Texas Instruments started building complete circuits, lots of circuit designers would be out of work. “These objections were difficult to overcome,” Kilby wrote later, “because they were all true.”

Anxious for customers, Kilby and Adcock took their model circuit to the military. After initially rejecting the idea, the Air Force, which was starting to lose faith in its cherished “molecular electronics,” agreed to help pay for further development. (Six years later, the Air Force’s public relations wing put out a book on microelectronics: “The development of integrated circuits is, in large part, the story of imaginative and aggressive management by the U.S. Air Force.”) As engineers learned how—thanks mainly to the process developed by Robert Noyce at Fairchild—to produce chips cheaply and to cram more and more components onto each chip, the product literally took off. The Minuteman missile system and the Apollo lunar mission were the first big projects to rely on integrated circuitry. By 1981, sales of chips alone, leaving aside the myriad products they were used in, reached $14 billion a year. The chip has been a glowing testament to man’s ingenuity and productivity; capacity has doubled and prices have been cut in half almost every year. If Detroit had matched the semiconductor industry’s productivity over the past two decades, the 1982 Cadillac would cost about $80 and get eight hundred miles to the gallon.

Texas Instruments’ first commercial integrated circuit was a simple “flip-flop” device, an on-off switch for digital logic in computers. It contained two transistors, six resistors, two diodes, and two capacitors. Within a few years, TI and Noyce’s firm, Fairchild, were selling chips with 100 components. By the mid-sixties, there were 1000 components in a single integrated circuit; the engineers called that medium-scale integration. By 1970, large-scale integration techniques had crammed up to 10,000 components on the same tiny chip. The current era, very large-scale integration, has brought chips with 250,000 or more components. Ultralarge-scale integration—a million components on a quarter-inch-square chip—is waiting in the wings.

Though it seems strange now, TI had trouble at first getting the chip into consumers’ lives. There had been the same problem with the transistor; in 1954, TI president Patrick Haggerty decided to promote it by putting it into a low-priced consumer product—the portable radio. A decade later, Haggerty decided to replay the scenario with the integrated circuit. He began searching for a good product that would put the chip into every home. One day late in 1964, Haggerty called in Jack Kilby and asked him to invent a small, cheap electronic calculator.

“I sort of defined Haggerty’s goal to mean something that would fit in a coat pocket and sell for less than $100,” Kilby recalls. It was a formidable assignment. The only electronic calculators available then were desk-top models, bigger and heavier than a typewriter and priced at $1200 or more. They had racks and racks of electronic components; they used ten, twenty, even thirty feet of wiring to carry information from the keyboard to the computational unit to the screen. The screen where answers were displayed was a miniature television set, heavy, expensive, and fragile. Clearly, Kilby would have to come up with a whole new kind of machine.

Texas Instruments, which yields nothing to the CIA when it comes to secrecy, put Kilby in a shrouded office and ordered him always to refer to his project by a code name. An earlier TI project had been called Project MIT, so Kilby took the logical next step and named his effort Project Cal Tech. “It was a miserable choice,” he says now. “Anybody who heard it could have figured out that we had a crash project going on calculator technology.” In any case, Cal Tech moved ahead. Working with Jerry Merryman, an expert on math and logic circuits, and James Van Tassel, who was responsible for the keyboard, Kilby started chopping away at the existing calculators, cutting $15 worth of parts here, $1.42 worth of wiring there, 78 cents’ worth of components somewhere else. The team reduced all the computational circuitry to four quarter-inch-square chips (one chip in later models); the connections from keyboard to chip to screen were reduced to a few inches of aluminum; answers were displayed on a strip of paper by a printing process a hundred times smaller and lighter than the TV screen in the earlier calculators.

Kilby had the job pretty much done by 1967, but the electronics were so far ahead of their time that the Pocketronic calculator was not officially introduced in stores until April 14, 1971 (the idea was to attract taxpayers working late that night on Form 1040). By current standards, that first model was a dinosaur, a four-function model that weighed about two pounds and sold for about $150; on the day this is being written, K-Mart is advertising a four-function calculator about one-tenth the size of the Pocketronic for $5.95. But Kilby’s design is still the gist of all calculators, and even today most TI models carry patent number 3,819,921—the Kilby patent for a “miniature electronic calculator.” Last year TI sued Casio, the Japanese calculator leader, for patent infringement, citing the Kilby patent.

A New World

As has rapidly become clear, the calculator represented only the tiniest opening wedge for the uses of the chip in everyday life. Two important applications of semiconductor technology—transducers and microprocessors—have taken the chip into thousands of new devices. A transducer is a device that changes one form of energy into another, like a telephone receiver that converts your voice into electrical impulses. There are sensors today that can turn sound, heat, pressure, light, or chemical stimuli into electronic pulses. This information can be sent to a microprocessor—an ultracomplex chip that holds an entire computer’s decision-making circuitry—that in turn decides how to react to its environment. A heat-sensitive transducer can tell whether your car’s engine is burning fuel at peak efficiency; if it is not, logic circuits in a microprocessor adjust the carburetor to get the optimum mixture of fuel and air. A light-sensitive transducer at the check-out stand reads the Universal Product Code on this magazine and tells the microprocessor to add $2 to your bill. A microprocessor in the head of the Exocet missile takes in radar pulses and adjusts speed and direction so that it will smash the destroyer squarely amidships. Detroit is about to introduce a talking car, with electronic voice simulators (controlled by microprocessors) that softly remind you to buckle up or buy a quart of oil. This spring, Ford unveiled a prototype listening car; you say, “Wipers,” and a sound-sensitive transducer sends impulses to a microprocessor that turns on the windshield wipers.

Chips may soon make possible the talking mute and the listening deaf man. A speech-synthesizing chip connected to a palm-size keyboard can “talk” for a person who has lost his larynx. A chip that turns sound into impulses that the brain can understand is in the works—not just a hearing aid but an electronic ear that can replace a faulty organic version. There has been some success in hooking a light-sensitive chip to a microprocessor to send impulses to the brain—in other words, an implantable electronic eye for the blind. A Scottish firm has developed a chip-based sensor to deal with another medical problem: a heat-sensitive chip sewn into a bra is supposed to warn the wearer when it’s a dangerous time of the month to make love.

Grad students will be turning out dissertations for decades on the social, economic, and philosophical impact of the microelectronics revolution. One development that seems clear already, though, is that thanks largely to the integrated circuit, 1984 will not be *1984*.

There was a time—when computers were huge, impossibly expensive, and daunting even to experts—when sociological savants regularly warned that ordinary people would eventually become pawns in the hands of the few Big Brothers, governmental and corporate, that could afford and understand computers. Computer pioneers in the forties predicted that the world would have only a half dozen or so computers. “Mankind is in danger of becoming a passive, machine-serving animal,” warned the formidable social critic Lewis Mumford.

The integrated circuit ended that particular threat. It may turn out that a few giant enterprises will destroy individual freedom and privacy, but it won’t be because of computers. Jack Kilby’s chip has made the computer something that almost anybody can have. Stroll over to Radio Shack and plunk down $159.95 and you can be the proud owner of a six-ounce, eleven-inch-long vest-pocket computer, built around a single chip and driven by penlight batteries, that is faster, more reliable, and more powerful than a multimillion-dollar fifties behemoth that filled a room and consumed power in the thousand-kilowatt range. The turning point may have been the moment in 1975 when IBM, the very arsenal of Big Brotherism, set aside plans for its next series of massive computers and went to work instead on a personal product. You can buy the IBM personal computer today at Sears for $1600 plus tax. Too expensive? This fall your drugstore will be stocking a Timex computer that will retail for $99.95. By 1984, according to industry analysts, about 35 million ordinary people will have computers of their own, and the stereotypical computer user will be a Little Brother seated at the keyboard to write his seventh-grade science report.

It is this mass distribution of computing power that gives rise to the romantic phrase “the second industrial revolution.” The first industrial revolution enhanced man’s physical prowess and freed people from the drudgery of back-breaking manual labor. The revolution spawned by the chip enhances our intellectual prowess and frees people from the drudgery of mind-numbing computational labor. While the computer—to take the simplest example—keeps track of tax changes, pay raises, vacation days, and union dues and grinds out your company’s 50,000 weekly paychecks, the time people once spent on those utterly monotonous chores can be used in endeavors that are more satisfying both personally and commercially.

On His Own

As his inventions prospered, Jack Kilby did, too. He never became seriously rich (unlike his co-inventor, Noyce, who has founded two major companies and accumulated a net worth of about $100 million), but a steady flow of raises, bonuses, and stock options left him comfortably situated. In February 1970, a few weeks after he went to the White House to receive the National Medal of Science, Texas Instruments named him director of engineering and technology. He seemed assured of more bonuses, more raises, and more promotions as long as he stayed at TI. And then, in November 1970, he left.

Over the years, Kilby had been thinking a great deal about the process of invention. It became more and more apparent to him that real creativity demanded real freedom—the kind of freedom that did not mesh with big corporate or governmental bureaucracies. There is “a basic incompatibility of the inventor and the large corporation,” Kilby has written. “Large companies . . . need to know . . . how much it will cost, how long it will take, and above all, what it’s going to do. None of these answers may be apparent to the inventor.” If Edison had worked for a big firm, Kilby once suggested in a lecture on the subject, there might have been no Edison light bulb, because the firm’s goals might not have matched the inventor’s. Kilby had enjoyed relative freedom at Texas Instruments, but even for him bureaucracy was beginning to chafe. His goal had always been to find nonobvious solutions to important problems, but anything nonobvious was anathema to corporate planners and accountants. So he packed up his books, his papers, and his favorite old slide rule and moved out to an office of his own.

He’s still there today, in a cluttered suite in a low-rise office building on Royal Lane in North Dallas, happily and creatively engaged in freelance inventing. The work has provided considerable satisfaction, he says, but “pretty marginal” economic rewards. The scope of his work has widened markedly from his Texas Instruments days. At his wife’s suggestion, he invented and patented a “telephone intercept” gimmick that keeps your phone from ringing unless the call is the one you want to take. For the past few years he has been back at work on semiconductors, trying to design a cheap, efficient household generator that will turn sunshine into electricity.

The idea here is an old one. Physicists have known for more than a century that if you shine light on a semiconductor material, like silicon, and hook up a pair of wires to the silicon, current will flow through the wires. On paper, at least, it appears that there is easily enough room on the average rooftop to generate all the electricity the household requires. Most attempts to take this idea from paper to practice have involved fairly large slabs of silicon. Kilby’s nonobvious approach goes the other way: his solar generator uses silicon pellets about as big as the head of a pin. The problem then becomes how to hook up wires to the tiny pellets; Kilby’s solution is to eliminate the wires. The pellets are packed in tubes of liquid. When the sun shines and current flows, the liquid is broken down by electrolysis into its chemical parts. If you recombine the parts (at nighttime, say, when there’s no sun), the process gives off electricity, current flows, and the lights come on in the house. The project is so promising that Texas Instruments has gotten interested, and Kilby is now temporarily back at his old firm, working in a secured lab (code name: Project Illinois). The first test generator is to be unveiled this fall.

For a man whose notion of heaven is to seize an interesting problem and solve it, Kilby’s lot is a happy one. He leads a quiet and—because he has always done his best work alone—fairly solitary life, traveling from TI to Royal Lane to his handsome three-bedroom home in North Dallas in a white Mercedes 280 SL that is just on the verge of the 100,000-mile mark. His wife, Barbara, died last fall, shortly after the couple’s 33rd anniversary; Kilby spends a fair amount of time with his two grown daughters. He is a voracious reader, working his way through the Dallas Morning News and the Wall Street Journal each morning and studying each issue of Time, Business Week, Electronics, and the Economist. The U.S. Patent Office issues about 70,000 patents every year, and Kilby tries to look at every one of them. “You read everything, that’s part of the job,” the inventor says. “You just never know when some of this stuff might turn out to be useful.” For recreation, Kilby says, “I read trash.”

And, of course, Kilby thinks. It is the one attribute that his colleagues all mention when you ask them to describe Jack Kilby. “I would say Jack has a good creative sense, he’s got that, but the other thing that I liked when I hired him is that he is a persister,” Willis Adcock says. “He just thinks a problem all the way through, works it through, and he doesn’t stop until he’s got it worked out. And, you know, you can see the results.”

You can see the results in Kilby’s more than fifty patents, in the hundreds of companies and the thousands of new products the integrated circuit has spawned, in the awards, prizes, plaques, and citations he has received. The people at Texas Instruments say he doesn’t normally get excited when he wins an award; he’s not that kind of person. But earlier this year Kilby received an honor that really mattered to him, for it was proof that he had succeeded at his chosen trade. He was inducted into the Patent Office’s National Inventors’ Hall of Fame, an august group of four dozen people—Edison, Bell, Ford, Shockley, the Wright brothers—whose inventions have made a difference.

On a sunny Sunday last February, a group of people gathered in the lobby of the Patent Office, just across the Potomac from the Washington Monument, for the hall of fame induction ceremony. Of the five inventors honored this year, only two—Kilby and Max Tishler, who started the vitamin industry in 1941 by synthesizing vitamin B2—are still alive, and both were present. When the Secretary of Commerce called out Tishler’s name, the aging chemist stood up and gave a long speech about how he got his idea and what it had meant. When Kilby’s turn came, he stood up for the briefest moment and said, “Thank you.” That was all. “He really didn’t say a word during the whole thing,” recalls Frederick Ziesenheim, who is the hall of fame’s president. “He just sat there like he was thinking about something. It looked like, no kidding, I sort of thought he was sitting there working out his next invention.”

T. R. Reid, a reporter for the Washington Post, is writing a book about semiconductor chips under a grant from the Alicia Patterson Foundation.

Chips and Money

A brief history of Texas Instruments.

To a lot of people in the semiconductor industry, the name Texas Instruments seems perfectly suited to the brawling, sprawling Dallas-based electronics giant that flaunts its size and wealth in the manner of the archetypal Texan. In fact, the company that was named after Texas was born in Oklahoma and nurtured in New Jersey, and it backed into its current name as an afterthought.

The company first saw the light of day in Tulsa in 1924 under the name Geophysical Research Corporation (GRC). Officially it was a subsidiary of Amerada Petroleum, which put up the money, but its real parents were two physicists, Clarence “Doc” Karcher and Eugene McDermott. They had found that a reflection-seismograph process that used sound waves to map faults and domes within the earth was a terrific way to find hidden oil deposits. They went into business hunting for oil along the Gulf Coast, and by 1930 GRC was the region’s leading geophysical exploration concern.

Karcher and McDermott chafed under Amerada’s control, so in 1930 they moved their operation to Dallas and opened (in partnership with, among others, a future president of the company, Cecil Green) an independent company, which they named Geophysical Service, Inc. Then, as now, the oil business was a sneaky, suspicious world, and GSI’s founders were anxious to do their research and develop their equipment someplace far from their competitors. They opened a lab in a second-story office in Newark and hired a 28-year-old aluminum salesman named J. Erik Jonsson to manage it.

The firm prospered in the thirties; it found so much oil that the founders decided to start an oil company of their own. The new concern, Coronado Corporation, was chartered in 1938, just before World War II broke out. The oil business was uncertain, financing was unavailable, and things looked grim. But Jonsson, who in 1934 had moved to the Dallas headquarters, saved the day. He realized that the echo-tracking techniques developed to search for oil would also work to search for enemy ships and planes. Jonsson pushed GSI into defense business during the war, and when V-J Day came along, the firm was established as an important supplier of military electronics.

One of the people who had been buying the company’s gear was Navy lieutenant Patrick Haggerty, an electrical engineer from North Dakota who made a big impression on Jonsson. When the war ended, Haggerty came to Dallas. Almost immediately he began to display the audacious flair that was his hallmark. To turn a geophysical exploration outfit into a serious electronics manufacturer, Haggerty insisted on a lavish new plant—so lavish that it would eat up just about all of the firm’s $350,000 line of credit at Republic National Bank. Jonsson went along, and the investment paid off nicely. By 1950 the company’s sales were approaching $10 million a year, and most of that was in manufacturing.

Now it was clear that the electronics tail was wagging the oil exploration dog and Geophysical Service needed a new name. After debating long and hard, the executives settled on General Instruments. The name was classic Haggerty—a suggestion that this impudent pup in Dallas could stand with General Electric and the other electronics giants in the East. Everybody in Dallas liked the name, but an important customer, the Pentagon, did not. There was another defense supplier with a similar name, and things were too confusing. In desperation Jonsson fell back on an idea that had been rejected earlier: Texas Instruments.

Haggerty’s next audacious move came in 1951, when the Bell System announced that it would license production rights to its revolutionary new invention, the transistor. TI was one of the first firms to send in the $25,000 fee. It was a surprising—you could say crazy—move for a small firm with almost no scientific resources. Transistors then cost $15 or more apiece, about five times as much as the vacuum tubes they were supposed to replace. The whole field of semiconductor electronics was unsettled, and nobody knew how to make transistors with uniform characteristics in any quantity. Thus the people who should have been buying transistors—the makers of radios, television sets, and computers—were sticking with vacuum tubes.

Undaunted, Haggerty hired a Bell Labs scientist named Gordon Teal and told him to develop a reliable mass-produced transistor that would sell for $2.50. Teal did it, and that inspired Haggerty’s most famous gambit. Committing $2 million to a crash research effort, TI teamed with a small radio manufacturer, Regency, to produce the first portable transistor radio. From its first day in the stores—November 1, 1954—the Regency was a smash hit. It was, after all, smaller, lighter, more reliable, and cheaper ($49.95) than anything else on the market.

More important, the transistor radio demonstrated to the public and to the electronics industry that transistors had arrived. Some TI veterans suggest that Haggerty targeted the whole radio project at a single consumer: Tom Watson of IBM. Whether or not the radio was decisive, IBM in 1955 began to replace its vacuum tube computers with transistorized models, and transistor makers like TI had an enormous new market.

It was Gordon Teal, working with a physical chemist named Willis Adcock, who brokered the marriage between TI and the material that would eventually become its mainstay: silicon. The Regency radio used transistors made of germanium, a semiconductor material that was easy to work with but could not withstand heat. Physicists knew that another semiconductor, silicon, retained its useful characteristics over a large temperature range. But silicon was brittle and hard to purify, and nobody had been able to make transistors out of it. Teal and Adcock tackled that problem.

In May 1954, Teal was scheduled to deliver a paper at a technical meeting in Dayton, Ohio. He heard speaker after speaker explain the insuperable problems that silicon posed. Finally, his turn came. He had listened with interest, Teal said, to the bleak predictions about the future of silicon transistors. “Our company,” he stated calmly, “now has two types of silicon transistors in production. . . . I just happen to have some here in my coat pocket.” Teal went offstage for a moment, returning with a small record player that used a germanium transistor. As a record played, Teal dumped the transistor in a pot of boiling oil on a hot plate in front of him; the sound immediately stopped. Then he wired in one of his silicon transistors and dipped it in the hot oil as the record spun. The music played on. The meeting ended in pandemonium, and TI was on its way to unchallenged leadership in semiconductor manufacturing.

TI’s investment in silicon later prompted Jack Kilby’s invention, in 1958, of the integrated circuit, the device that sparked the modern microelectronics revolution. As a business, Texas Instruments today is a certified giant—its 1981 sales of $4.2 billion placed it 91st in the Fortune 500—but it is a troubled one. Profits dropped by 50 per cent in 1981, and the slump hit hardest at the heart of the company, semiconductors. Analysts estimate that TI lost $50 million on its semiconductor operations last year. In 1982, for the first time, its venerable position as the world’s leading semiconductor maker faces a strong challenge from Motorola. But the company has some bright spots, old and new. Geophysical exploration still accounts for some 15 per cent of its revenues, and the defense business remains strong. A relatively new TI endeavor, metallurgy, has put another Texas Instruments invention in the pocket of every American consumer: the “sandwich coin.” When the U.S. Mint stopped making silver dimes and quarters, it had to keep their replacements the same size and weight for use in vending machines. The answer was copper and zinc pressed into layers in each coin—an idea the government bought from TI.

T.R.R.

What Is a Silicon Chip, Anyway?

Step by step into the second industrial revolution.

- At left is a silicon ingot, made in a furnace from molten silicon that forms around a seed crystal into a pure single-crystal structure. Semiconductor people call it a silver bullet, but it’s really bluish in color, and rather beautiful.

- A machine slices the silicon into wafers, each one about as thick as five pages of this magazine. The wafers then get coated with silicon dioxide, an insulator. Extremely fine patterns—circuit pathways—are etched through the insulator and then filled with conducting materials, laid down by a photolithographic process. The wafer at right contains more than a thousand chips, each of which contains several thousand components.

- A laser beam cuts the wafer into its individual chips; then each chip is mounted on a grid of wire electrical leads, shown greatly enlarged (right) and at about half of actual size (above). For protection, they are encased in airtight black plastic.

- Finally, the grids holding the encased chips are cut apart and the wire leads are bent into shape (left), so that each one is ready to become the brains of an electronic device.

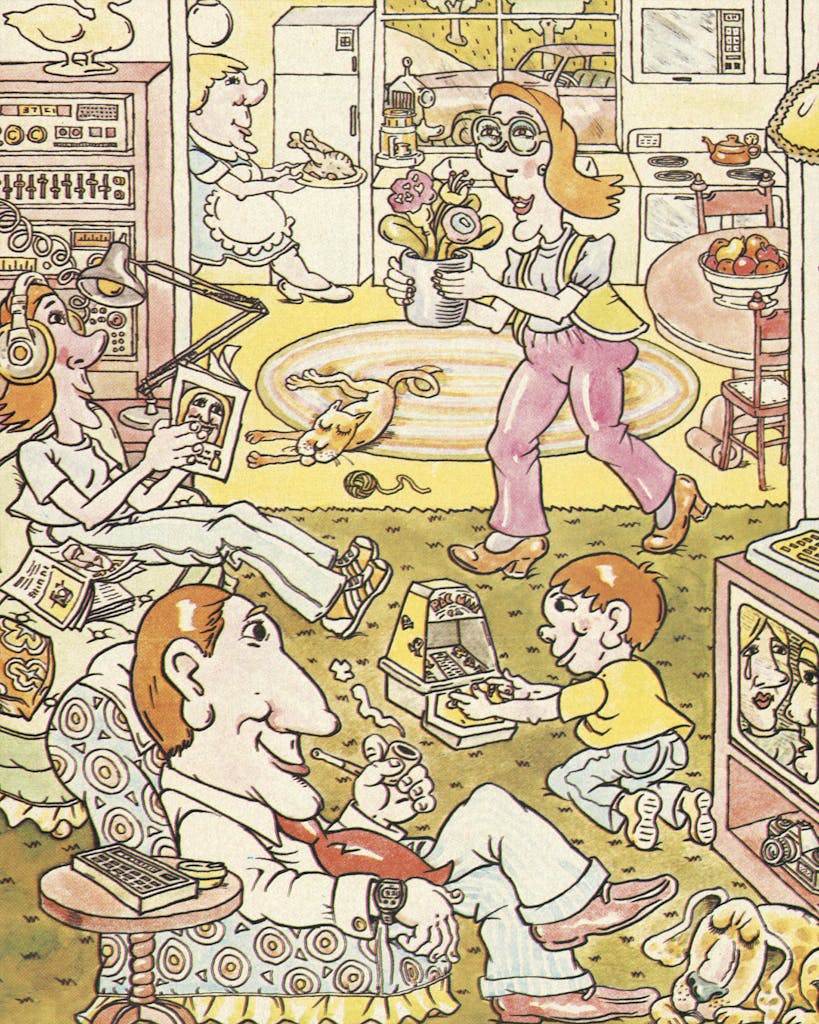

How Many Silicon Chips Can You Find in This Picture?

What do chips mean to folks like you and me? Plenty.

You can’t actually see any of them, but in a brand-new home with brand-new appliances, there are as many as sixty silicon chips. They are used to turn electrical impulses into complex instructions. In this picture, chips are used in (clockwise from lower left): the pocket calculator, the stereo, the refrigerator with a digital readout, the family car, the stove, the microwave oven, the thermostat, the home computer, the TV, the camera, the Pac-Man video game, and Dad’s watch.
